Google’s Gemini push reset expectations for consumer chatbots, but the center of gravity for serious work has shifted again. Anthropic’s Claude models are now the default choice for many developers and teams who care less about flashy demos and more about shipping code, running projects, and keeping data safe. The race is no longer about one model ruling everything; it is about which system quietly runs the workday, and on that front Claude has pulled ahead.

The change shows up most clearly in how engineers talk: Gemini is still part of the stack, but Claude is the tool they trust when a bug threatens a Friday release or when a legal team needs a careful contract review. Benchmarks, developer rankings, and even stock-market reactions now point in the same direction, showing Anthropic’s system moving from “interesting alternative” to the leading AI for real work.

The end of the Gemini-first era

For a while, Google Gemini symbolized big-tech strength in AI, backed by Google Search, Android, and Workspace. Even now, executives highlight how Gemini adoption has surged and how it helps Google compete with Microsoft and other rivals. Yet popularity is not the same as being the best tool for heads-down work, and that is where Gemini’s early lead has started to erode.

Recent reporting spells this out bluntly, stating that Google Gemini is no longer the top option for getting real tasks done and that Anthropic’s Claude now wears that crown. That shift reflects what I hear from teams who have moved complex workflows, like refactoring large Java services or drafting financial reports, away from Gemini and toward Claude because they see fewer hallucinations, better structure, and more reliable follow-through on instructions.

Why Claude is now the workhorse

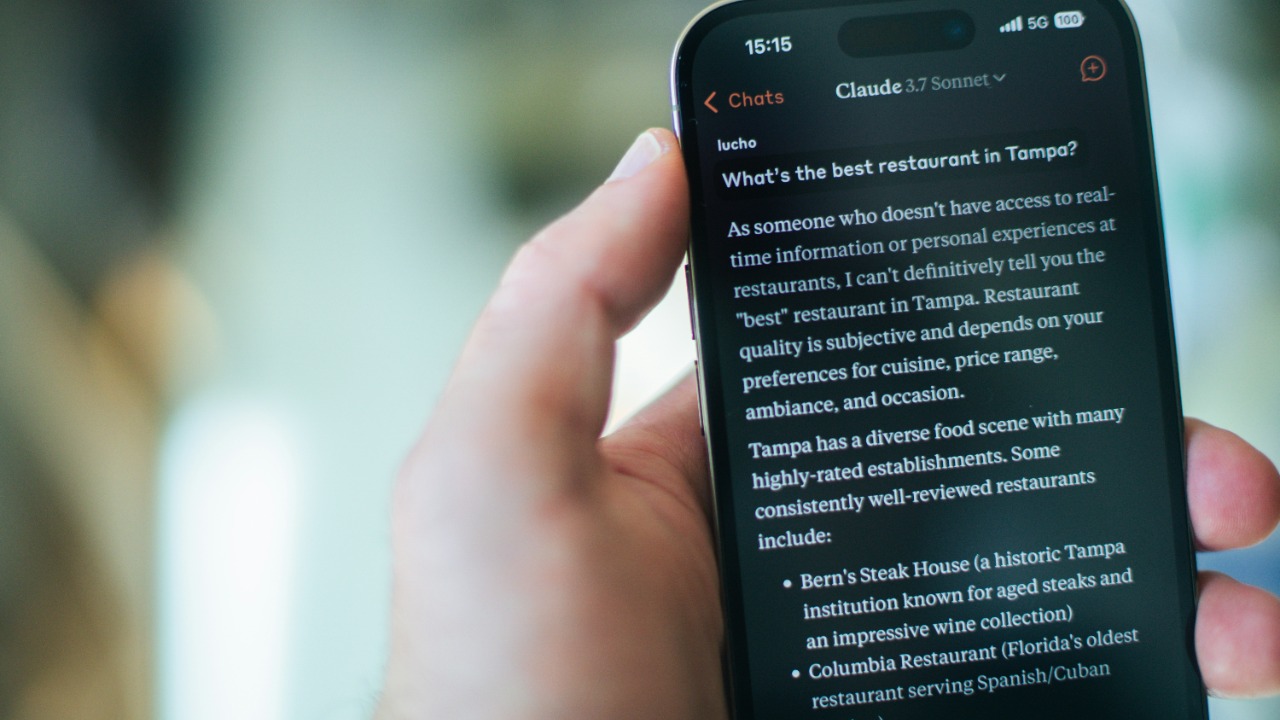

The core reason Claude is winning is simple: it behaves more like a senior colleague than a chatty assistant. From its early days, when it was positioned as a direct competitor to OpenAI’s ChatGPT, Claude focused on careful reasoning and safety. That focus helped it become a staple for professionals and creators who cannot afford sloppy mistakes, and it has only deepened as Anthropic rolled out more capable generations.

Developer rankings echo the same story. In recent January power rankings, Claude models jumped near the top for coding tools, and engineers now talk about Claude as a default part of their stack rather than a niche experiment. One viral clip put it this way: while ChatGPT still gets most of the hype, Claude is quietly becoming the standard for complex logic and development work. I hear the same sentiment in private Slack channels and internal engineering forums.

Coding, agents, and the Claude advantage

The biggest shift is happening in software development, where Claude has moved from “handy helper” to the engine behind serious engineering tasks. Claude Opus 4.1 was an early sign, described as Anthropic’s most advanced model for complex reasoning and software engineering challenges, and it laid the groundwork for even more capable releases. The current Claude Opus 4.5 is described as “The Coding and Safety Leader,” and that is not marketing fluff; it reflects how often developers now reach for Claude when they need a model that can both fix a gnarly concurrency bug and avoid leaking secrets into logs.

Independent comparisons back this up. One January analysis of Claude, Gemini, and Grok framed 2026 as a year in which no single model dominates, but it still singled out Claude as the backbone of enterprise refactoring work, with Gemini and Grok playing more specialized roles. Another technical breakdown named Claude Sonnet 4.5 the winner for complex logic and system architecture. That matches what I hear from teams using it to design microservice boundaries or reason about distributed systems.

Claude Code vs Gemini Code Assist on the shop floor

When you drop down from model names to actual tools, the difference becomes even clearer. Claude Code has been analyzed as a new kind of developer tool, built for long-running, multi-step software tasks rather than just autocomplete. It is designed to understand repositories, orchestrate changes, and act more like a junior engineer who can keep context across many files and commits.

Google’s answer is Gemini Code Assist, which fits editor-centric workflows with clear diffs and incremental approvals, while Claude Code fits terminal-driven projects, orchestration, and long autonomous tasks. In practice, that means a front-end developer working inside Visual Studio Code might be happy with Gemini Code Assist, but a platform team running long refactors through command-line tools is more likely to lean on Claude Code for its ability to keep going without constant human nudges.

Agents and autonomy: where Claude jumps ahead

The most dramatic sign of Claude’s rise is its move into agentic work, where the model does not just answer prompts but actually carries out tasks on your computer. A recent update turned Claude into an autonomous desktop agent that can execute complex, multi-step tasks. You describe an outcome, such as “prepare a board pack from last quarter’s metrics,” and the system works on your files, breaks the job into steps, and runs through them while you stay in control.

This push toward autonomy is not a surprise if you look at Anthropic’s roadmap. During one visit, observers reported that Anthropic is setting its sights on autonomous AI systems capable of carrying out complex tasks on their own, even shrinking a week-long human task to mere seconds. That ambition is now visible on real desktops, where Claude agents handle chores like reconciling invoices or triaging customer support tickets without constant supervision.